Before I even start saying anything about keyword research I want to take my hat off to Richard Baxter because the tools and methodologies he shared at MozCon make me feel silly for even thinking about bringing something to the Keyword Research table. Now with that said, I have a few ideas about using data sources outside of those that the Search Engines provide to get a sense of what needs people are looking to fulfill right now. Consider this the first in a series.

Correlation Between Social Media & Search Volume

The biggest problem with the Search Engine-provided keyword research tools is the lag time in the data. The web is inherently a real-time channel, and to capitalize on that you need every advantage you can get to stay ahead of search demand. Google Trends will give you data when there are huge breakouts on keywords around current events, but there is a three-day delay with Google Insights, and AdWords only gives you monthly numbers!

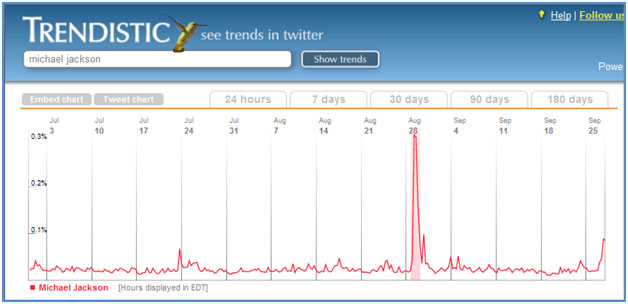

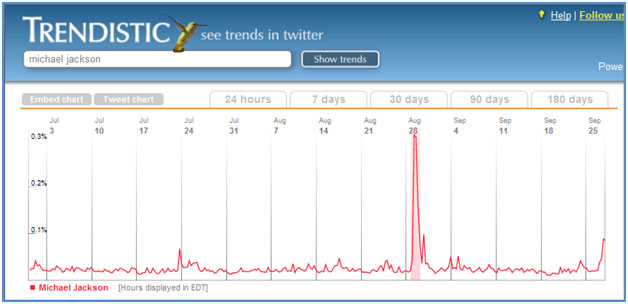

However there is often a very strong correlation between the number of people talking about a given subject or keyword in Social Media and the amount of search volume for that topic. Compare the trend of tweets posted containing the keyword “Michael Jackson” with search volume for the last 90 days.

"Michael Jackson" Tweets

"Michael Jackson" Search Volume

The graphs are pretty close to identical, with a huge spike on August 29th, which is Michael Jackson’s (and my) birthday. The problem is that, given the limitations of tools like Google Trends and Google Insights, you may not be able to find this out until September 1st for many keywords. Beyond that, you may not be able to find complementary long tail terms with search volume.

The insight here is that subjects people are tweeting about are ultimately keywords that people are searching for. The added benefit of using social listening for keyword research is that you can also get a good sense of the searcher’s intent to better fulfill their needs.

Due to this correlation, social listening allows you to uncover what topics and keywords will have search demand and what topics are going to have a spike in search demand, in real-time.
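One way to sanity-check this correlation for your own keyword is to line up daily tweet counts against daily search volume and compute a Pearson correlation coefficient. The sketch below is illustrative only: the `pearson` helper is a hypothetical name, and the daily counts are made-up placeholders rather than the numbers behind the graphs above.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (at least 2 points)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Made-up daily counts for one keyword over a week (placeholders, not real data).
daily_tweets = [120, 135, 128, 900, 310, 150, 140]
daily_searches = [1000, 1100, 1050, 7200, 2600, 1200, 1150]

r = pearson(daily_tweets, daily_searches)
print(f"correlation between tweets and searches: {r:.3f}")
```

A value close to 1 means the two series rise and fall together, which is the pattern the "Michael Jackson" graphs show. Keep in mind that a single big spike can dominate the coefficient, so it is worth eyeballing the chart as well.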

N-grams

Before we get to the methodology for doing this I have to explain one basic concept –N-grams. An N-gram is a contiguous run of N items taken from a longer sequence. In the case of search engines the N is the number of words in a search query. For example (I'm so terrible with gradients):

is a 5-gram. The majority of search queries fall between 2-grams and 5-grams; anything beyond a 5-gram is most likely a long tail keyword that doesn’t have a large enough search volume to warrant content creation.

If this is still unclear, check out the Google Books Ngram viewer; it’s a pretty cool way to get a good idea of what N-grams are. Also you should check out John Doherty’s Google Analytics Advanced Segments post, where he talks about how to segment N-grams using RegEx.
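If it helps to see it in code, here is a minimal sketch of splitting a query into word N-grams. The `ngrams` function name and the sample query are assumptions made up for illustration; they are not taken from any tool mentioned in this post.

```python
def ngrams(text, n):
    """Return every run of n consecutive words in text, in order."""
    words = text.lower().split()
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


# Hypothetical five-word query, so the whole query is itself a 5-gram.
query = "michael jackson trial verdict today"

print(ngrams(query, 2))
# ['michael jackson', 'jackson trial', 'trial verdict', 'verdict today']
print(ngrams(query, 5))
# ['michael jackson trial verdict today']
```

Asking for an N larger than the number of words in the query simply returns an empty list.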

Real-Time Keyword Research Methodology

Now that we’ve got the small vocabulary update out of the way let’s talk about how you can do keyword research in real-time. The following methodology was developed by my friend Ron Sansone with some small revisions from me in order to port it into code.

1. Pull all the tweets containing your keyword from Twitter Search within the last hour. This part is pretty straightforward; you want to pull down the most recent portion of the conversation right now in order to extract patterns. Use Topsy for this. If you’re not using Topsy, pulling the last 200 tweets via Twitter is also a good sized data set to use.

2. Identify the top 10 most repeated N-grams ignoring stop words. Here you identify the keywords with the highest (ugh) density. In other words the keywords that are tweeted the most are the ones you are considering for optimization. Be sure to keep this between 2-grams and 5-grams; beyond that you are most likely not dealing with a large enough search volume to make your efforts worthwhile. Also be sure to exclude stop words so you don’t end up with N-grams like “jackson the” or “has Michael.” Here’s a list of English stop words and Textalyser has an adequate tool for breaking a block of text into N-grams.

3. Check to see if there is already search volume in the Adwords Keyword tool or Google Insights. This process is not just about identifying breakout keywords that aren’t being shown yet in Google Insights but it’s also about identifying keywords with existing search volume that are about to get a boost. Therefore you’ll want to check the Search Engine tools to see if any search volume exists in order to prioritize opportunities.

4. Pull the Klout scores of all the users tweeting them. Yeah, yeah I know Klout is a completely arbitrary calculation but you want to know that the people tweeting the keywords have some sort of influence. If you find that a given N-gram has been used many times by a bunch of spammy Twitter profiles then that N-gram is absolutely not useful. Also if you create content around the given term, you’ll know exactly who to send it to.
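Step 2 is the part that is most tedious by hand, so here is a rough sketch of it. This is not the GoFish code: the function names, the tiny stop word set and the sample tweets are all placeholders, and it assumes that "ignoring stop words" means dropping any N-gram that starts or ends with a stop word (which is what rules out “jackson the” and “has Michael”). Fetching the tweets (step 1) and the search volume and Klout lookups (steps 3 and 4) are left out because they depend on external APIs.

```python
import re
from collections import Counter

# Tiny illustrative stop word set; swap in a full English list for real use.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "has",
              "for", "on", "at", "was", "it"}


def tokenize(tweet):
    """Lowercase a tweet, drop URLs, and split it into simple word tokens."""
    without_urls = re.sub(r"https?://\S+", " ", tweet.lower())
    return re.findall(r"[a-z0-9']+", without_urls)


def top_ngrams(tweets, min_n=2, max_n=5, limit=10):
    """Count 2- to 5-grams across tweets and return the most repeated ones."""
    counts = Counter()
    for tweet in tweets:
        words = tokenize(tweet)
        for n in range(min_n, max_n + 1):
            for i in range(len(words) - n + 1):
                gram = words[i:i + n]
                # Assumption: skip N-grams that begin or end with a stop word.
                if gram[0] in STOP_WORDS or gram[-1] in STOP_WORDS:
                    continue
                counts[" ".join(gram)] += 1
    return counts.most_common(limit)


# Placeholder tweets standing in for the last hour of Twitter Search results.
sample_tweets = [
    "Michael Jackson trial starts today",
    "Watching the Michael Jackson trial",
    "the jackson trial is on the news",
]

for gram, count in top_ngrams(sample_tweets, limit=3):
    print(count, gram)
```

On the three sample tweets, "jackson trial" comes out on top with three mentions, followed by "michael jackson" and "michael jackson trial" with two each. Stop words in the middle of an N-gram are kept on purpose so phrases like "king of pop" survive.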

Methodology Expanded

I expanded on Ron’s methodology by introducing another data source. If you were at SMX East you might have heard me express the love that low budget hustlers (such as myself) have for

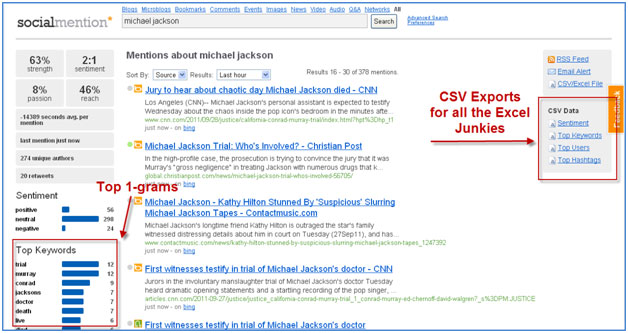

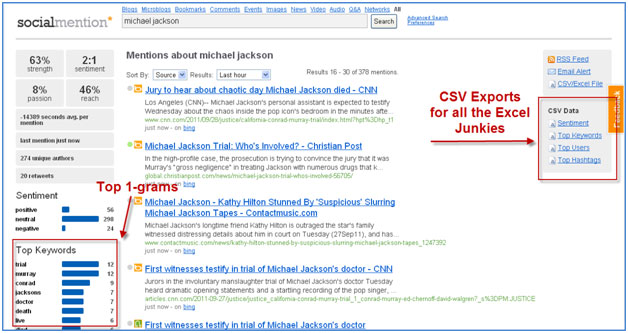

SocialMention. Using SocialMention allows you pull data from up to 100+ social media properties and news sources. Unlike Topsy or Twitter there is an easy CSV/Excel File export and they give you the top 1-grams being used in posts related to that topic. Be sure to exclude images, audio and video from your search results as they are not useful.

"Michael Jackson" Social Mention

"Michael Jackson" Social Mention

So What's the Point?

So what does all of this get me? Well today it got me "michael jackson trial," "jackson trial," "south park" and "heard today." So if I was looking to do some content around Michael Jackson I'd find out what news came to light in court, illustrate the trial and the news in a blog post using South Park characters and fire it off to all the influencers that tweeted about it. Need I say more? You can now easily figure out what type of content would make viral link bait in real-time.

GoFish

So this sounds like a lot of work to get the jump on a few keywords, doesn’t it?

Well I can definitely relate and especially since I am a programmer it’s quite painful for me to do any repetitive task. Seriously am I really going to sit in Excel and remove stop words? No I’m not and neither should you. Whenever a methodology like this pops up the first thing I think is how to automate it. Ladies and gentlemen, I’d like to introduce you to the legendary GoFish real-time keyword research tool.

I built this from Ron’s methodology and it uses the Topsy, Repustate and SEMRush APIs. When I get some extra time I will include the SocialMention API and hopefully Google will cut the lights back on for my Adwords API as well.

I seriously doubt it will handle the load that comes with being on the front page of SEOmoz, as it is only built on 10 proxies and each of these APIs has substantial rate limitations (Topsy: 33k/day, Repustate: 50k/month, SEMRush: I’m still not sure), but here it is nonetheless. If anyone wants to donate some AWS instances or a bigger proxy network to me I’ll gladly make this weapons grade. Shout out to John Murch for letting me borrow some of his secret stash of proxies and shout out to Pete Sena at Digital Surgeons for making me an all-purpose GUI for my tools.

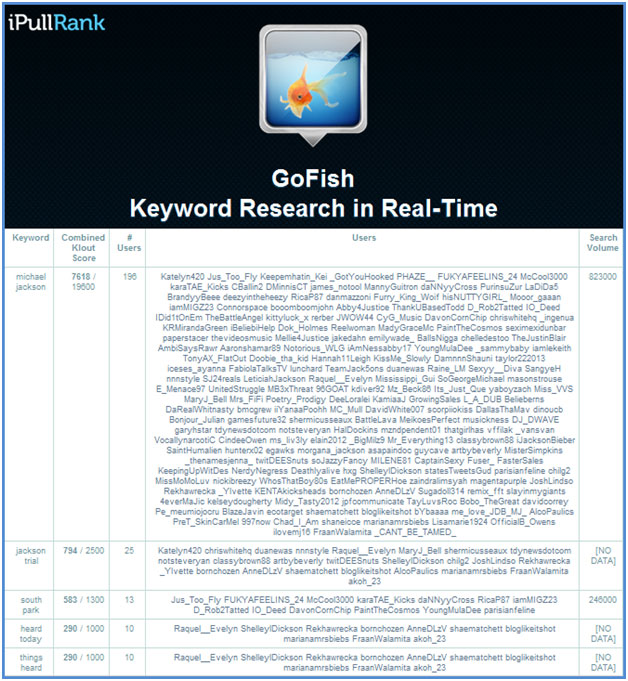

Anyway all you have to do is put in your keyword, press the button, wait for a time and voila you get output that looks like this:

The output is the top 10 N-grams, the combined Klout scores of all the users that tweeted the given N-gram vs the highest combined Klout score possible, all of the users in the data set that tweeted them and the search volume if available.
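To make the "combined vs highest possible" number concrete, here is a small sketch of how such a figure could be computed once you already have the tweets and a score per user. It is an illustration rather than the actual GoFish implementation: the `ngram_influence` name, the sample data and the plain substring match are all assumptions, as is the 100-point ceiling per user.

```python
def ngram_influence(ngram, tweets, klout_scores, max_score=100):
    """Summarize who tweeted an N-gram and how much combined influence they have.

    tweets is a list of (username, text) pairs; klout_scores maps username to score.
    """
    # Each user counts once, no matter how many times they tweeted the N-gram.
    users = sorted({user for user, text in tweets if ngram in text.lower()})
    combined = sum(klout_scores.get(user, 0) for user in users)
    return {
        "ngram": ngram,
        "users": users,
        "combined": combined,
        # Assumption: every user could score at most max_score.
        "possible": max_score * len(users),
    }


# Placeholder data; real usernames and scores would come from the APIs.
tweets = [
    ("alice", "Michael Jackson trial today"),
    ("bob", "the jackson trial"),
    ("alice", "jackson trial again"),
    ("carol", "south park"),
]
scores = {"alice": 60, "bob": 35, "carol": 50}

result = ngram_influence("jackson trial", tweets, scores)
print(f"{result['ngram']}: {result['combined']} / {result['possible']}")
# jackson trial: 95 / 200
```

A low combined score relative to the possible maximum is a hint that the N-gram is mostly being pushed by low-influence or spammy accounts.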

So that's GoFish. Think of it as a work in progress but let me know what features will help you get more out of it.

Until Next Time…

Hi Mike,

like James, I too have been correlating data from social and sources for a long while, especially for two reasons:

Trendistic is surely a great tool, and Topsy as well. But for someone like me who works in non-US markets or, better, in very specific markets, geo-localization of the trends is essential (and this is also a third reason Google Trends especially is many times frustrating).

That's why I use also Trendmap to concentrate my investigation to a specific area, even though - admittedly - it would be even better to use the Twitter APIs and pick up directly with them the trends' locations and, after, the "location" of the Tweeps talking about the trending topic.

Finally - this is not directly connected, but it is related - any event list that can be reduced to an XML source can be scraped in order to plan content production. For instance, if you are in the entertainment industry a wonderful source is IMDB.com and its database. Let's say you have to plan the content for a blog: scrape the IMDB database and select 2012/2013 as release year. Then do the same for anniversaries (births, deaths, great movie premieres...). Finally combine all these results with what a tool like yours can tell you: is a movie already showing an interesting trend? Prioritize content about it... and so on.

Specifically, this use of IMDB was something I was already doing when directing a movie channel, but at that time it was hugely harder without the technology we have now.

Ok, that's all, and pardon me for such a long comment :)

Gianluca Fiorelli, don't ask for a pardon. Your comment is a bonus to this article and really a fantastic contribution. Actually, I was also thinking about how we could narrow our investigation to a specific country or region, and you shared a wonderful trendsmap link. This seems like a really fantastic tool to use while targeting a specific regional audience.

And your second tip is also a great one for evaluating the dates of any specific events. As you gave us the example of IMDB for movies or celebrities, we can do the same for any industry, for example SES for the SEO industry; in the same way we can surf federal sites for national events. Collecting information like this will definitely help to build great content which also matches up with Internet trends.

Aww, thanks #blushinglikeateen

Gianluca!

How do you:

Awesome insights as always man. I love the IMDB idea and in general just getting creative with the data sources to validate trends. I'm definitely going to have to expand a lot more on this... I have a good one for part 2 that I think you'll love.

As always thanks for reading man!

-Mike

Thanks dude... just one off topic question: how much did you sleep today? 4 hours or less, I suppose, or you are using scheduled tweets because you were still up few hours ago :)

Remember: SEOs also need to rest.

LOL. Nope I don't use scheduled tweets. I just have too much to do to sleep that much.

I have a flight to Dublin tonight, I'll sleep during that. =]

Dublin? Mate you're going to LOVE it there!

Not coming across to London by any chance?

I get out to Dublin every few months. Love it there. I'm actually just there for a day in both directions. I'm headed to the BPM Music Conference in Birmingham. I've never been there before. I gotta get back to London soon though.

Hi, I don't get that IMDB thing...could you please elaborate it little further? Thanks

Yeah! Love the app Mike. I'll add a shout in my webinar tonight.

I like the idea of validating trending SM searches with search volume data - I'd love to see an insights scraper that could validate the rising searches section here: https://www.google.com/insights/search/#q=burton+snowboards&cmpt=q

Anyway, catch you later. I'm going to be doing some skills based stuff (excel, pivot tables etc) and sharing new tools. That's this one included :-)

R

Can't wait to see you in action later, Excel Paganini :)

Glad you like it man!

Scraping the rising searches was actually the first thing I tried to do but it's rendered in JS so I didn't have any luck screen scraping it. Then I went as far as to do some packet sniffing to figure out what URL Google is querying to get the data but I think it was just giving me the left panel of data and not the rising searches side. It also just wasn't sustainable without a bunch of proxies. So what I need to do is get something like HTMLUnit up and running so I can scrape after the JS is rendered.

I built most of this a year ago though when I wasn't up on HTMLUnit so I'll def take another crack at it since people are finding this useful.

I'll def be tuning in because learning some pivot table magic will be helpful for the excel sheets this tool will ultimately be exporting!

-Mike

Google makes it trickier and trickier to scrape, but iMacros actually works pretty well when HTML scrapers don't. It also supports proxies, and typically rotating after every 40-50 results keeps things running smoothly.

Well first I have to say "Bloody Awesome!!". Michael King, your first night bar tab for Mozcon 2012 is on me! :)

I really wasn't intending to use the tool for anything useful ... just had to give it a run and see how it worked, 'cos that's what you do. Right?

Well, things didn't quite go according to plan...but I'm so glad they didn't!

Since I was working on a client's site when I stopped to read your post, I loaded up GoFish and typed in the high volume, massive competition keyword term in the list I had open. The client is a Real Estate company. Yes, I did say Real Estate!

So I really wasn't expecting much, other than seeing how you presented the results...then BAM! There on the screen in front of me were 3 terms I had never known were associated. I Googled them...they seemed to fit. All signs were that this was a sub-niche that my client had never mentioned. This sent me scrambling for my keyword research lists...my client notes, the site brief...nope...they weren't there.

So after a few hours of waiting for daytime to roll around, I called my client to discover that this is indeed a lucrative sub niche that he forgot to mention!

So here I am, redeveloping pages with a handful of very low competition terms (all between 8% and 20%) that actually yield an extra 8,000 potential searches a month!

So, as Nerds mentioned...this tool is not just useful for building short term strategies, but also for highlighting things that have slipped through the cracks :)

Awesome post Mike!

Keep 'em coming.

Sha

That's a great insight as to how to work with this tool, which I love. The hot topic aspect aside, it can give you an even deeper look into how people actually talk about your topic, which is huge when it comes to content development and searchability.

Thanks!

google insight. Awesome! I never heard of ipullrank.com Nice.

Are you sure there's a 3-day wait with Google Insights? I find it's usually 1 or 2. E.g. I checked just now (Thursday) and it's showing data for Tuesday (looking at worldwide search for SEOMOZ, last 7 days).

Good catch. You're right it's typically 2, just goes to show how impatient I am when it comes to data ;)

Phenomenal post Mike. Very useful tool for measuring keywords in real time. Quite impressive with the charts where we can see the social trends. I think it is required to measure these types of parameters so we can execute our plans accordingly. The thing I like the most is the N-gram concept; I think it is a kind of keyword structure which is measured by your tool, as you said it's for long tail keywords.

Your last article about infographics was amazing and is one of my favourites. Hope the OpenGraph API is done successfully with Josh as you said to me last time, and again thanks for the twitter account @techarity. Thumbs up for you.

Posts like these make me self-conscious of my own guest-post submissions. :P

Really though, excellent work. You mention using Tom's ImportXML tutorial, and that's an excellent resource for grabbing data from the web. I've used his techniques to build a lot of my own SEO tools for "low budget hustlers" as you say.

I feel weird dropping links to myself, but this tutorial will get you Klout scores and usernames for a branded term search: https://www.whitefireseo.com/tools/klout-api-google-docs/

You mention it in your post, so here's an easy way to get that info if your tool lacks the bandwidth, currently!

Your post is awesome Mitch!

Thanks for adding on and don't ever be self-conscious about posts if you're doing stuff as tight as this!

-Mike

Love it Mike...awesome info..keep us in your knowledge loop...:)

I somehow missed this post. Glad I found it. It's great hearing more concepts for efficient keyword research. Didn't know you were a programmer either - Love how you think. I'll take automation over repetition any day!!

Would it be safe to assume that this would be a good way to cultivate potential followers for your Twitter account since they appear to be tweeting about topics/products relevant to your website?

Absolutely. That's a great insight that I hadn't even thought about.

I find it to be one of the most powerful aspects of this tool. Great stuff and thanks again! I've been passing this tool around to my circles/networks all morning.

To continue down the twitter-cultivation road... if there was a way to sort out the retrieved profiles by their Klout score, one might be able to narrow down the most relevant profiles to follow/get-followers-from. Just sayin'...could save a little time. Thanks again!

Yep it collects that data, I'm just trying to think of a cool way to display it. I'm thinking some sort of expandable drop down with all the users and their klout scores next to them.

That sounds like a cool idea Michael. A nice interface to clearly view the data.

Followerwonk is a better way of harvesting that stuff mate....

That's a good tool too!

Although I think iPullRank's data is interesting because it's based on keywords they are actually tweeting within the past...and not just what's in their profile/bio.

This way you get a constant, changing stream of potential followers/customers.

In what regard? Also Followerwonk doesn't have an API. I try to keep scraping to a minimum since it breaks so often.

Good info, but now I feel like we all need to see this Michael Jackson story using South Park characters *_*

N-gram theory is very new for me. I came to know about it for the first time here. There are really interesting tools available to drill down into trends. It will make keyword research more insightful for me. That's for sure. Really great work and unique sharing about social media use.

I read the whole blog and vital information is presented. Nowadays this relation is necessary because without it businesses and other entities cannot survive. This information and topic is very important. Thanks for sharing this information.

Real nice - if you are utilizing a cache I'll bet you won't need much more hardware. Maybe many of the search terms will be the same.

True but the results can potentially change very quickly.

Great post. Thanks

awesome post Mitch ! this tool is helpful thanks for sharing

I hadn't heard of the go-fish keyword research tool. Am off to investigate it right now!

I did enjoy this page, commented and forgot to thumb up, so I've just added one now thanks to today's article.

Thanks for sharing.

In addition to the other SEO values of social media, I like social because it provides an amazing "testing ground": if people like something on social media, they will like it everywhere. I use social media to test future campaign ideas, the way I word things, and even what time of day or week I release things.

Yes, social tends to provide short term success, but there are ways to translate that into long term value. Go social!

Thanks for sharing Go Fish. Sorry we exceeded your API limits, but like you said, you made the front page of SEOmoz.

Perfect example of the relationship between social media and SEO. This whole concept, in theory, sounds complex but you dumbed it down well. I like the "real time" viral content but how long can that last? A day or two at most? If there was a way to determine a topic that could last 5 - 7 days and you do a really awesome infographic I could only imagine the links you could get.

P.S. Please write a blog post illustrating the Michael Jackson trial with South Park characters!

Great post, I really like the real-time keyword research methodology. Quite an impressive post with lots of information.

Very nice explanation about n-grams. I will have to use this strategy to stay on top of the breaking trends. Thanks for sharing.

Oops, I am late here and I am sure that the reason why your tool stopped responding is the large amount of traffic… (My bad luck!)

I am really impressed with the overall idea of correlating social with search demand. We usually talk about viral content, and I know many people have failed miserably at creating viral content… Although their content was amazing, it didn’t reach the wider audience even when everything seemed correct… I think this identifies the factor of trend (the time when people are actually looking for it!)

This idea will allow people to explore content opportunities and cater to the eyeballs of the wider audience!

Another great piece Mike!

"low budget hustlers" - love it

I *think* he was talking about me ;)

Good post, but social media still has temporary traffic, which is normally not measured for proving to the client as well.

Completely agree with this statement. It's great influence, but doesn't necessarily have the longevity.

That's literally one of my favorite topics. I always regretted lagging behind on trend prediction and keyword research, but the real-time analysis is simply awesome. I am eagerly waiting for a more developed version of your keyword tool, and the N-gram concept is very useful in keyword research.

Mike, this is a really useful tool you've put together. I tested it out for one of my keywords and it brought some unique perspective to my mind based on what's going on. For instance, I typed tablet computers, and I got keywords related to Amazon's Kindle, Apple's cutting down of its orders for parts. Great stuff for potential linkbait, tweetable content, etc...

edit: I'm almost averse to sharing it since I don't want it to max out your API calls!

Dan over at SEOGadget has a similar tool in Google Docs: https://seogadget.co.uk/using-google-docs-to-generate-hot-content-strategies/ check it out!

You got a shout out from Richard Baxter this afternoon... must tell you something about your work, right?

Great post, great stuff.

I love the new Gofish tool btw. Also the tip about blending the information (southpark characters and MJ) provided a great bit of inspiration. Great post!

Good post, I have been correlating data from social mentions and sources to assist keyword research for a while and also to benchmark.

The only thing I find is that the data set that Social Mention provides may not be the best for the Australian market, for example, which is why paid providers of social analytics and mentions software are better for day to day analysis.

Another thing you need to be cautious of is your geo-focused terms: if you deal with global brands compared to market-specific brands, the data can easily get skewed.

But overall the best thing to do is test and keep testing to see what works effectively.

"figure out what type of content would make viral link bait in real-time."

That right there is probably the Holy Grail of social SEO. I think if you could get ahead of the curve when it comes to trending topics, you'd have a better chance of getting the links. You have to keep in mind that bigger sites are going to get most of the link love, even if they aren't the first ones to write about a certain topic.

"You have to keep in mind that bigger sites are going to get most of the link love, even if they aren't the first ones to write about a certain topic."

This is not necessarily true. It does often happen but super awesome content can in fact trump brand content and you can also ride the wave of the QDF algo if you get enough saturation.

Really awesome little tool, iPullRank, and clever thinking about combining it all together. Thank you for sharing :)